Volume scan from multiple sweeps using xradar

This example shows how to create a volume scan from multiple sweep files stored on AWS. The volume scan structure is based on a tree-like hierarchical collection of xarray objects.

Imports

[1]:

```python
import fsspec
import xradar as xd
import numpy as np
import cartopy.crs as ccrs
import cartopy.feature as cfeature
import matplotlib.pyplot as plt
import cmweather  # registers radar colormaps such as "ChaseSpectral" with matplotlib
import warnings
from pandas import to_datetime
from datetime import datetime
from matplotlib import pyplot
from datatree import DataTree, open_datatree
from matplotlib.animation import FuncAnimation
from IPython.display import HTML

warnings.simplefilter("ignore")
```

Access radar data from the Colombian radar network on AWS

Access data from the IDEAM bucket on AWS. Detailed information can be found here

[2]:

```python
def create_query(date, radar_site):
    """
    Creates a string for querying the IDEAM radar files stored in the AWS bucket

    :param date: date to be queried, e.g. datetime(2021, 10, 3, 12). Datetime python object
    :param radar_site: radar site, e.g. Guaviare
    :return: string with the IDEAM radar bucket format
    """
    prefix = f"l2_data/{date:%Y}/{date:%m}/{date:%d}/{radar_site}/{radar_site[:3].upper()}"
    if date.hour != 0:
        # Hour-level prefix, e.g. BAR23040703 matches all files from hour 03
        return f"{prefix}{date:%y%m%d%H}"
    # At hour 0 the prefix stops at the day, so it matches every file of that day
    return f"{prefix}{date:%y%m%d}"
```

[3]:

```python
date_query = datetime(2023, 4, 7, 3)
radar_name = "Barrancabermeja"
query = create_query(date=date_query, radar_site=radar_name)
str_bucket = "s3://s3-radaresideam/"
fs = fsspec.filesystem("s3", anon=True)  # public bucket: anonymous access, no credentials
```

[4]:

```python
query
```

[4]:

'l2_data/2023/04/07/Barrancabermeja/BAR23040703'

[5]:

```python
radar_files = sorted(fs.glob(f"{str_bucket}{query}*"))
radar_files[:4]
```

[5]:

['s3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030004.RAW0LZ9',

's3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030107.RAW0LZC',

's3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030238.RAW0LZE',

's3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030315.RAW0LZH']
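The returned keys appear to follow a `<SITE><yymmddHHMMSS>.RAW<id>` pattern. The first three letters of the site name are followed by a timestamp: compare the query prefix `BAR23040703` with `BAR230407030004`. The sketch below assumes that naming convention, which is inferred from the listing above and not from IDEAM documentation. It parses a key into its site code and scan time so files can be sorted or grouped by time. `parse_ideam_key` is a hypothetical helper.

```python
import posixpath
import re
from datetime import datetime

# Assumed key layout, inferred from the bucket listing: <SITE><yymmddHHMMSS>.RAW<id>
_KEY_PATTERN = re.compile(r"^(?P<site>[A-Z]{3})(?P<stamp>\d{12})\.RAW\w+$")


def parse_ideam_key(key):
    """Hypothetical helper: return (site_code, scan_time) for an IDEAM radar file key."""
    name = posixpath.basename(key)
    match = _KEY_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unexpected file name: {name}")
    scan_time = datetime.strptime(match["stamp"], "%y%m%d%H%M%S")
    return match["site"], scan_time


keys = [
    "s3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030004.RAW0LZ9",
    "s3-radaresideam/l2_data/2023/04/07/Barrancabermeja/BAR230407030107.RAW0LZC",
]
for key in keys:
    print(parse_ideam_key(key))
```

Reading the last six digits as HHMMSS is part of the assumption. Under it, the four files listed above start at 03:00:04, 03:01:07, 03:02:38 and 03:03:15.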

Let’s check the elevation angles in each file using xradar’s DataTree reader

The IDEAM radar network operates with a volume scan every five minutes. Each volume scan consists of four tasks:

* SURVP: a “super-resolution” sweep at the lowest elevation angle, usually 0.5 deg, with 720 rays in azimuth (one every 0.5 deg)
* PRECA: 1.5, 2.4, 3.0, and 5.0 deg elevation angles, with a shorter range than SURVP
* PRECB: 6.4 and 8.0 deg elevation angles, with a shorter range than PRECA
* PRECC: 10.0, 12.5, and 15.0 deg elevation angles, with a shorter range than all the previous tasks
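The task layout described above can be written down as a small lookup table. This is an illustration, not an xradar or IDEAM API. `NOMINAL_TASKS` holds the nominal angles listed above. The hypothetical `identify_task` guesses a file's task from its number of sweeps. That works here only because the four tasks have distinct sweep counts (1, 4, 2 and 3). Matching on counts rather than angles is deliberate, because measured fixed angles can deviate from the nominal ones: the SURVP file used in this example reports 1.3 deg.

```python
# Nominal IDEAM task layout as described in the text (angles in degrees)
NOMINAL_TASKS = {
    "SURVP": [0.5],
    "PRECA": [1.5, 2.4, 3.0, 5.0],
    "PRECB": [6.4, 8.0],
    "PRECC": [10.0, 12.5, 15.0],
}


def identify_task(fixed_angles):
    """Hypothetical helper: guess the task name from the number of sweeps in a file."""
    matches = [
        name for name, angles in NOMINAL_TASKS.items() if len(angles) == len(fixed_angles)
    ]
    if len(matches) != 1:
        raise ValueError(f"cannot identify task from {len(fixed_angles)} sweep(s)")
    return matches[0]


# Total number of sweeps in one volume scan
n_sweeps = sum(len(angles) for angles in NOMINAL_TASKS.values())
print(n_sweeps)  # 10
print(identify_task([1.5, 2.4, 3.1, 5.1]))  # PRECA
```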

[6]:

```python
# Open the first four task files (SURVP, PRECA, PRECB, PRECC) through a local file cache.
# Options for the simplecache layer are keyed by its protocol name.
task_files = [
    fsspec.open_local(
        f"simplecache::s3://{i}", s3={"anon": True}, simplecache={"cache_storage": "."}
    )
    for i in radar_files[:4]
]

# One georeferenced xradar DataTree per task file
ls_dt = [xd.io.open_iris_datatree(i).xradar.georeference() for i in task_files]

# Print the sweeps in each task with their fixed angle and maximum range
for i in ls_dt:
    sweeps = list(i.children.keys())
    print(f"task sweeps: {sweeps}")
    for j in sweeps:
        if j.startswith("sweep"):
            print(
                f"{j}: {i[j].sweep_fixed_angle.values: .1f} [deg], {i[j].range.values[-1] / 1e3:.1f} [km]"
            )
    print("----------------------------------------------------------------")
```

task sweeps: ['radar_parameters', 'sweep_0']

sweep_0: 1.3 [deg], 298.9 [km]

----------------------------------------------------------------

task sweeps: ['radar_parameters', 'sweep_0', 'sweep_1', 'sweep_2', 'sweep_3']

sweep_0: 1.5 [deg], 224.8 [km]

sweep_1: 2.4 [deg], 224.8 [km]

sweep_2: 3.1 [deg], 224.8 [km]

sweep_3: 5.1 [deg], 224.8 [km]

----------------------------------------------------------------

task sweeps: ['radar_parameters', 'sweep_0', 'sweep_1']

sweep_0: 6.4 [deg], 175.0 [km]

sweep_1: 8.0 [deg], 175.0 [km]

----------------------------------------------------------------

task sweeps: ['radar_parameters', 'sweep_0', 'sweep_1', 'sweep_2']

sweep_0: 10.0 [deg], 99.0 [km]

sweep_1: 12.5 [deg], 99.0 [km]

sweep_2: 15.0 [deg], 99.0 [km]

----------------------------------------------------------------

Create a single volume scan

Let’s use the first four files, one per task (SURVP, PRECA, PRECB, PRECC), to create a single volume scan. The result is a tree-like hierarchical object whose root has the four task DataTrees as children.

[7]:

```python
# Each task DataTree becomes a child node of a new root node
vcp_dt = DataTree(
    name="root",
    children=dict(SURVP=ls_dt[0], PRECA=ls_dt[1], PRECB=ls_dt[2], PRECC=ls_dt[3]),
)
```

[8]:

```python
vcp_dt.groups
```

[8]:

('/',

'/SURVP',

'/SURVP/radar_parameters',

'/SURVP/sweep_0',

'/PRECA',

'/PRECA/radar_parameters',

'/PRECA/sweep_0',

'/PRECA/sweep_1',

'/PRECA/sweep_2',

'/PRECA/sweep_3',

'/PRECB',

'/PRECB/radar_parameters',

'/PRECB/sweep_0',

'/PRECB/sweep_1',

'/PRECC',

'/PRECC/radar_parameters',

'/PRECC/sweep_0',

'/PRECC/sweep_1',

'/PRECC/sweep_2')
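The group listing above is a depth-first walk of the tree. It starts at the root `/`, then lists each task followed by its own children. The hypothetical `group_paths` below reproduces the same ordering. It uses only the standard library and plain nested dicts, not the DataTree class.

```python
def group_paths(tree, prefix=""):
    """Return slash-separated node paths of a nested dict in depth-first (pre-order) order."""
    paths = [prefix or "/"]
    for name, child in tree.items():
        paths.extend(group_paths(child, f"{prefix}/{name}"))
    return paths


def task(n_sweeps):
    # A task node: radar_parameters plus n sweeps, each a leaf (empty dict)
    return {"radar_parameters": {}, **{f"sweep_{i}": {} for i in range(n_sweeps)}}


volume = {"SURVP": task(1), "PRECA": task(4), "PRECB": task(2), "PRECC": task(3)}
paths = group_paths(volume)
print(len(paths))  # 19
print(paths[:4])  # ['/', '/SURVP', '/SURVP/radar_parameters', '/SURVP/sweep_0']
```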

[9]:

```python
print(f"Size of data in tree = {vcp_dt.nbytes / 1e6 :.2f} MB")
```

Size of data in tree = 204.23 MB

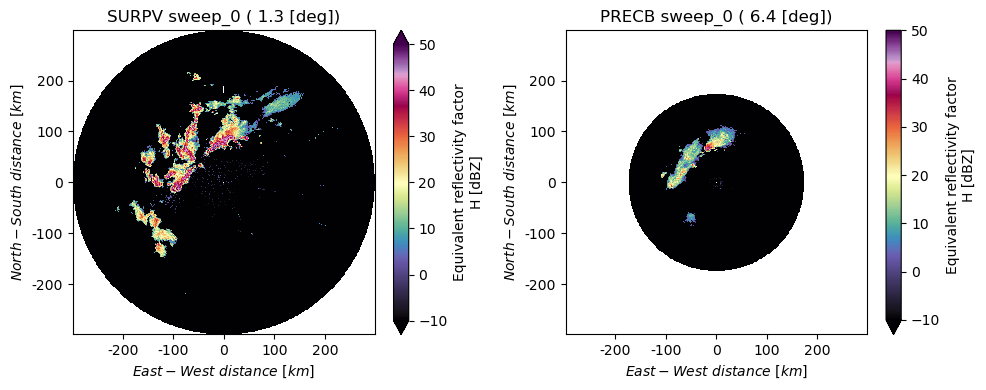
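Most of the ~204 MB reported above comes from per-gate arrays. As a back-of-the-envelope check, assume for illustration a sweep with 360 rays and 747 range gates. Assume it holds eleven float32 moments, one int16 moment and float64 `x`, `y`, `z` coordinates. These counts are assumptions chosen to resemble the georeferenced IRIS sweeps used here.

```python
# Illustrative sweep layout (assumed): 360 rays x 747 range gates
n_azimuth, n_range = 360, 747
n_gates = n_azimuth * n_range

# Bytes per gate for the per-gate arrays, by dtype size
float32_moments = 11  # e.g. DBZH, ZDR, RHOHV, KDP, ...
int16_moments = 1     # e.g. a hydrometeor class field
float64_coords = 3    # georeferenced x, y, z

bytes_per_gate = 4 * float32_moments + 2 * int16_moments + 8 * float64_coords
sweep_bytes = n_gates * bytes_per_gate

print(n_gates)  # 268920
print(f"{sweep_bytes / 1e6:.1f} MB per sweep")  # 18.8 MB per sweep
```

The 1D coordinates and scalar metadata add only a few kB. Ten sweeps of broadly this size are therefore in line with the total above. Georeferencing is not free: the float64 `x`, `y`, `z` coordinates account for 24 of the 70 bytes per gate.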

PPI plot from the DataTree object

Now that we have a tree-like hierarchical volume scan object, we can access the data of each task and sweep in two ways. One is attribute (dot) access, e.g. `vcp_dt.SURVP`. The other is dictionary-key access, e.g. `vcp_dt['PRECB']`.
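The two access styles can be made concrete without any radar data using a toy stand-in. The hypothetical `Node` class below is built on plain dicts; it is not DataTree's implementation. It supports attribute access, key access and slash-separated paths. DataTree also accepts path-like keys such as `vcp_dt["PRECB/sweep_0"]`.

```python
class Node:
    """Toy tree node: children reachable as attributes, keys, or 'a/b' paths."""

    def __init__(self, **children):
        self.children = children

    def __getitem__(self, path):
        # Walk one path component at a time, e.g. "PRECB/sweep_0"
        node = self
        for part in path.strip("/").split("/"):
            node = node.children[part]
        return node

    def __getattr__(self, name):
        # Only called when normal attribute lookup fails, e.g. for child names
        children = self.__dict__.get("children", {})
        if name in children:
            return children[name]
        raise AttributeError(name)


tree = Node(
    SURVP=Node(sweep_0=Node()),
    PRECB=Node(sweep_0=Node(), sweep_1=Node()),
)
print(tree.PRECB.sweep_0 is tree["PRECB"]["sweep_0"] is tree["PRECB/sweep_0"])  # True
```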

[10]:

```python
fig, (ax, ax1) = plt.subplots(1, 2, figsize=(10, 4))

# Tick formatter: x/y coordinates are in meters, tick labels are shown in km
m2km = lambda x, _: f"{x/1000:g}"

# Dot (attribute) access
vcp_dt.SURVP.sweep_0.DBZH.plot(
    x="x", y="y", cmap="ChaseSpectral", vmin=-10, vmax=50, ax=ax
)
ax.set_title(
    f"SURVP sweep_0 ({vcp_dt.SURVP.sweep_0.sweep_fixed_angle.values: .1f} [deg])"
)
ax.xaxis.set_major_formatter(m2km)
ax.yaxis.set_major_formatter(m2km)
ax.set_ylabel(r"$North - South \ distance \ [km]$")
ax.set_xlabel(r"$East - West \ distance \ [km]$")

# Dictionary-key access
vcp_dt["PRECB"]["sweep_0"].DBZH.plot(
    x="x", y="y", cmap="ChaseSpectral", vmin=-10, vmax=50, ax=ax1
)
ax1.set_title(
    f"PRECB sweep_0 ({vcp_dt.PRECB.sweep_0.sweep_fixed_angle.values: .1f} [deg])"
)
ax1.xaxis.set_major_formatter(m2km)
ax1.yaxis.set_major_formatter(m2km)
# Use the same extent as the SURVP panel so both panels are directly comparable
ax1.set_xlim(ax.get_xlim())
ax1.set_ylim(ax.get_ylim())
ax1.set_ylabel(r"$North - South \ distance \ [km]$")
ax1.set_xlabel(r"$East - West \ distance \ [km]$")

fig.tight_layout()
```
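The `m2km` formatter only changes tick labels; the `x`/`y` coordinates stay in meters. Matplotlib calls it with the tick value and the tick position, and the lambda ignores the position. It divides the value by 1000 and prints it with the `g` format, which drops trailing zeros. The same formatting is reproduced below as a plain function so it can be checked outside matplotlib.

```python
def m2km(x, _pos=None):
    """Same formatting as the notebook's m2km lambda: meters -> km tick label."""
    return f"{x/1000:g}"


for tick in (-150000.0, -12500.0, 0.0, 100000.0):
    print(m2km(tick))  # -150, -12.5, 0, 100
```

Passing a plain callable to `set_major_formatter` relies on Matplotlib wrapping it in a `FuncFormatter`, which recent Matplotlib versions do automatically.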

Multiple volume scans in one DataTree object

Similarly, we can create a tree-like hierarchical object with multiple volume scans.

[11]:

```python
def data_accessor(file):
    """
    Open an AWS S3 file, resolved locally through file caching
    """
    return fsspec.open_local(
        f"simplecache::s3://{file}",
        s3={"anon": True},
        simplecache={"cache_storage": "./tmp/"},
    )


def create_vcp(ls_dt):
    """
    Creates a tree-like object for one volume scan from its four task DataTrees
    """
    return DataTree(
        name="root",
        children=dict(SURVP=ls_dt[0], PRECA=ls_dt[1], PRECB=ls_dt[2], PRECC=ls_dt[3]),
    )


def mult_vcp(radar_files):
    """
    Creates a tree-like object with multiple volume scans, grouping every four
    consecutive files in the bucket (SURVP, PRECA, PRECB, PRECC) into one volume scan
    """
    # Assumes the sorted list starts with a SURVP file and contains only complete volumes
    ls_files = [radar_files[i : i + 4] for i in range(0, len(radar_files), 4)]
    ls_sigmet = [
        [xd.io.open_iris_datatree(data_accessor(i)).xradar.georeference() for i in j]
        for j in ls_files
    ]
    ls_dt = [create_vcp(i) for i in ls_sigmet]
    return DataTree.from_dict({f"vcp_{idx}": i for idx, i in enumerate(ls_dt)})
```
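`mult_vcp` groups files purely by position: after sorting, every four consecutive keys are treated as one SURVP/PRECA/PRECB/PRECC volume. The sketch below covers only that grouping step, using the standard library. The hypothetical `group_volumes` also drops an incomplete trailing group. `mult_vcp` as written would instead pass such a group to `create_vcp`, which would raise an `IndexError`.

```python
def group_volumes(files, files_per_volume=4):
    """Hypothetical helper: split a sorted file list into complete volumes keyed vcp_0, vcp_1, ..."""
    groups = [
        files[i : i + files_per_volume] for i in range(0, len(files), files_per_volume)
    ]
    # Keep only complete volumes; a short trailing group cannot fill all four tasks
    complete = [g for g in groups if len(g) == files_per_volume]
    return {f"vcp_{idx}": g for idx, g in enumerate(complete)}


files = [f"file_{n:02d}" for n in range(26)]  # 6 full volumes plus 2 leftover files
volumes = group_volumes(files)
print(list(volumes))  # ['vcp_0', 'vcp_1', 'vcp_2', 'vcp_3', 'vcp_4', 'vcp_5']
print(volumes["vcp_1"])  # ['file_04', 'file_05', 'file_06', 'file_07']
```

Position-based grouping also assumes no file is missing from the listing; one absent task file would shift every later volume. Grouping by the timestamp in the file name, or by each file's sweep count, would be a more defensive alternative.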

[12]:

```python
# Test it with the first 24 files in the bucket (six volume scans). More files can be included, e.g. radar_files[:96]
vcps_dt = mult_vcp(radar_files[:24])
```

We now have six volume scans (VCPs) in one tree-like hierarchical object.

[13]:

```python
list(vcps_dt.keys())
```

[13]:

['vcp_0', 'vcp_1', 'vcp_2', 'vcp_3', 'vcp_4', 'vcp_5']

[14]:

```python
print(f"Size of data in tree = {vcps_dt.nbytes / 1e9 :.2f} GB")
```

Size of data in tree = 1.23 GB

PPI animation using the lowest elevation angle

We can create an animation using the `FuncAnimation` class from the `matplotlib.animation` module.

[15]:

```python
fig, ax = plt.subplots(subplot_kw={"projection": ccrs.PlateCarree()})

# Radar-centred azimuthal equidistant CRS, wrapped as a cartopy projection
proj_crs = xd.georeference.get_crs(vcps_dt.vcp_1.SURVP)
cart_crs = ccrs.Projection(proj_crs)

# Draw the first frame; the same mesh is updated in place by the animation
sc = vcps_dt.vcp_1.SURVP.sweep_0.DBZH.plot.pcolormesh(
    x="x",
    y="y",
    vmin=-10,
    vmax=50,
    cmap="ChaseSpectral",
    edgecolors="face",
    transform=cart_crs,
    ax=ax,
)
title = f"SURVP - {vcps_dt.vcp_1.SURVP.sweep_0.sweep_fixed_angle.values: .1f} [deg]"
ax.set_title(title)

gl = ax.gridlines(
    crs=ccrs.PlateCarree(),
    draw_labels=True,
    linewidth=1,
    color="gray",
    alpha=0.3,
    linestyle="--",
)
plt.gca().xaxis.set_major_locator(plt.NullLocator())
gl.top_labels = False
gl.right_labels = False
ax.coastlines()


def update_plot(vcp):
    # Replace the mesh values with the lowest sweep of the given volume scan
    sc.set_array(vcps_dt[vcp].SURVP.sweep_0.DBZH.values.ravel())


# One frame per volume scan key (vcp_0, vcp_1, ...), 150 ms apart
ani = FuncAnimation(fig, update_plot, frames=list(vcps_dt.keys()), interval=150)
plt.close()
HTML(ani.to_html5_video())
```

[15]:

Bonus!!

Analysis-ready data, cloud-optimized (ARCO) format

Tree-like hierarchical data can be stored in an analysis-ready, cloud-optimized (ARCO) format such as Zarr.

[16]:

```python
zarr_store = "./multiple_vcp_test.zarr"
_ = vcps_dt.to_zarr(zarr_store)
```
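`to_zarr` writes each tree node as a Zarr group, so the store mirrors the tree's paths on disk. The hypothetical `list_zarr_groups` below recovers the group paths from a local store using only the standard library, as a quick check of what was written. It assumes the Zarr v2 on-disk format, where every group directory contains a `.zgroup` file. Zarr v3 stores use `zarr.json` instead, and which format is written depends on the installed zarr version. The demo builds a fake store from empty marker files.

```python
import os
import tempfile


def list_zarr_groups(store):
    """Return '/'-style group paths found in a local Zarr v2 directory store (assumed format)."""
    groups = []
    for dirpath, dirnames, filenames in os.walk(store):
        dirnames.sort()  # deterministic traversal order
        if ".zgroup" in filenames:  # array directories hold .zarray instead and are skipped
            rel = os.path.relpath(dirpath, store)
            groups.append("/" if rel == "." else "/" + rel.replace(os.sep, "/"))
    return sorted(groups)


# Demo on a fake store layout made of empty marker files
with tempfile.TemporaryDirectory() as fake_store:
    for path in ["", "vcp_0", "vcp_0/SURVP", "vcp_0/SURVP/sweep_0"]:
        os.makedirs(os.path.join(fake_store, path), exist_ok=True)
        open(os.path.join(fake_store, path, ".zgroup"), "w").close()
    print(list_zarr_groups(fake_store))  # ['/', '/vcp_0', '/vcp_0/SURVP', '/vcp_0/SURVP/sweep_0']
```

In the notebook, `list_zarr_groups(zarr_store)` would list the `vcp_*` volumes and their task and sweep groups, if the store was written in the v2 format.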

The Zarr store can be read back with `open_datatree`, using the `zarr` engine.

[17]:

```python
vcps_back = open_datatree(zarr_store, engine="zarr")
```

[18]:

```python
display(vcps_back)
```

(Interactive DataTree HTML repr of `vcps_back` omitted.) The repr shows scalar `altitude`, `latitude` and `longitude` coordinates, and a series of sweep datasets (`sweep_0`, `sweep_1`, `sweep_2`, ...).

Each sweep dataset is about 19 MB, with dimensions `azimuth: 360` and `range: 747` (1 km to 224.8 km). Its contents are:

* Coordinates: `azimuth`, `range`, `elevation`, `time` and the georeferenced `x`, `y`, `z`.
* A `crs_wkt` coordinate describing an azimuthal equidistant CRS on WGS 84, centred on the radar at latitude 6.9328, longitude -73.7625.
* 17 data variables: the moments `DBTH`, `DBZH`, `DB_DBTE8`, `DB_DBZE8`, `DB_HCLASS`, `KDP`, `PHIDP`, `RHOHV`, `SQIH`, `VRADH`, `WRADH`, `ZDR`, plus the sweep metadata `follow_mode`, `prt_mode`, `sweep_fixed_angle`, `sweep_mode` and `sweep_number`.

- Differential phase HV

- standard_name :

- radar_differential_phase_hv

- units :

- degrees

[268920 values with dtype=float32]

- RHOHV(azimuth, range)float32...

- long_name :

- Correlation coefficient HV

- standard_name :

- radar_correlation_coefficient_hv

- units :

- unitless

[268920 values with dtype=float32]

- SQIH(azimuth, range)float32...

- long_name :

- Signal Quality H

- standard_name :

- signal_quality_index_h

- units :

- unitless

[268920 values with dtype=float32]

- VRADH(azimuth, range)float32...

- long_name :

- Radial velocity of scatterers away from instrument H

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument_h

- units :

- meters per seconds

[268920 values with dtype=float32]

- WRADH(azimuth, range)float32...

- long_name :

- Doppler spectrum width H

- standard_name :

- radar_doppler_spectrum_width_h

- units :

- meters per seconds

[268920 values with dtype=float32]

- ZDR(azimuth, range)float32...

- long_name :

- Log differential reflectivity H/V

- standard_name :

- radar_differential_reflectivity_hv

- units :

- dB

[268920 values with dtype=float32]

- follow_mode()<U7...

[1 values with dtype=<U7]

- prt_mode()<U7...

[1 values with dtype=<U7]

- sweep_fixed_angle()float64...

[1 values with dtype=float64]

- sweep_mode()<U20...

[1 values with dtype=<U20]

- sweep_number()int64...

[1 values with dtype=int64]

sweep_3

<xarray.DatasetView> Size: 19MB
Dimensions:            (azimuth: 360, range: 747)
Coordinates:
    altitude           float64 8B ...
  * azimuth            (azimuth) float32 1kB 0.05768 1.038 2.027 ... 358.1 359.1
    crs_wkt            int64 8B ...
    elevation          (azimuth) float32 1kB ...
    latitude           float64 8B ...
    longitude          float64 8B ...
  * range              (range) float32 3kB 1e+03 1.3e+03 ... 2.245e+05 2.248e+05
    time               (azimuth) datetime64[ns] 3kB ...
    x                  (azimuth, range) float64 2MB ...
    y                  (azimuth, range) float64 2MB ...
    z                  (azimuth, range) float64 2MB ...
Data variables: (12/17)
    DBTH               (azimuth, range) float32 1MB ...
    DBZH               (azimuth, range) float32 1MB ...
    DB_DBTE8           (azimuth, range) float32 1MB ...
    DB_DBZE8           (azimuth, range) float32 1MB ...
    DB_HCLASS          (azimuth, range) int16 538kB ...
    KDP                (azimuth, range) float32 1MB ...
    ...                 ...
    ZDR                (azimuth, range) float32 1MB ...
    follow_mode        <U7 28B ...
    prt_mode           <U7 28B ...
    sweep_fixed_angle  float64 8B ...
    sweep_mode         <U20 80B ...
    sweep_number       int64 8B ...

PRECA

<xarray.DatasetView> Size: 232B
Dimensions:              ()
Data variables:
    altitude             float64 8B ...
    instrument_type      <U5 20B ...
    latitude             float64 8B ...
    longitude            float64 8B ...
    platform_type        <U5 20B ...
    time_coverage_end    <U20 80B ...
    time_coverage_start  <U20 80B ...
    volume_number        int64 8B ...
Attributes:
    Conventions:      None
    comment:          im/exported using xradar
    history:          None
    institution:      None
    instrument_name:  None
    references:       None
    source:           None
    title:            None
    version:          None

radar_parameters

<xarray.DatasetView> Size: 24B
Dimensions:    ()
Coordinates:
    altitude   float64 8B ...
    latitude   float64 8B ...
    longitude  float64 8B ...
Data variables:
    *empty*

sweep_0

<xarray.DatasetView> Size: 15MB
Dimensions:            (azimuth: 360, range: 581)
Coordinates:
    altitude           float64 8B ...
  * azimuth            (azimuth) float32 1kB 0.05219 1.033 2.049 ... 358.0 359.1
    crs_wkt            int64 8B ...
    elevation          (azimuth) float32 1kB ...
    latitude           float64 8B ...
    longitude          float64 8B ...
  * range              (range) float32 2kB 1e+03 1.3e+03 ... 1.747e+05 1.75e+05
    time               (azimuth) datetime64[ns] 3kB ...
    x                  (azimuth, range) float64 2MB ...
    y                  (azimuth, range) float64 2MB ...
    z                  (azimuth, range) float64 2MB ...
Data variables: (12/17)
    DBTH               (azimuth, range) float32 837kB ...
    DBZH               (azimuth, range) float32 837kB ...
    DB_DBTE8           (azimuth, range) float32 837kB ...
    DB_DBZE8           (azimuth, range) float32 837kB ...
    DB_HCLASS          (azimuth, range) int16 418kB ...
    KDP                (azimuth, range) float32 837kB ...
    ...                 ...
    ZDR                (azimuth, range) float32 837kB ...
    follow_mode        <U7 28B ...
    prt_mode           <U7 28B ...
    sweep_fixed_angle  float64 8B ...
    sweep_mode         <U20 80B ...
    sweep_number       int64 8B ...

sweep_1

<xarray.DatasetView> Size: 15MB
Dimensions:            (azimuth: 360, range: 581)
Coordinates:
    altitude           float64 8B ...
  * azimuth            (azimuth) float32 1kB 0.03021 1.074 2.063 ... 358.1 359.1
    crs_wkt            int64 8B ...
    elevation          (azimuth) float32 1kB ...
    latitude           float64 8B ...
    longitude          float64 8B ...
  * range              (range) float32 2kB 1e+03 1.3e+03 ... 1.747e+05 1.75e+05
    time               (azimuth) datetime64[ns] 3kB ...
    x                  (azimuth, range) float64 2MB ...
    y                  (azimuth, range) float64 2MB ...
    z                  (azimuth, range) float64 2MB ...
Data variables: (12/17)
    DBTH               (azimuth, range) float32 837kB ...
    DBZH               (azimuth, range) float32 837kB ...
    DB_DBTE8           (azimuth, range) float32 837kB ...
    DB_DBZE8           (azimuth, range) float32 837kB ...
    DB_HCLASS          (azimuth, range) int16 418kB ...
    KDP                (azimuth, range) float32 837kB ...
    ...                 ...
    ZDR                (azimuth, range) float32 837kB ...
    follow_mode        <U7 28B ...
    prt_mode           <U7 28B ...
    sweep_fixed_angle  float64 8B ...
    sweep_mode         <U20 80B ...
    sweep_number       int64 8B ...

PRECB

<xarray.DatasetView> Size: 232B
Dimensions:              ()
Data variables:
    altitude             float64 8B ...
    instrument_type      <U5 20B ...
    latitude             float64 8B ...
    longitude            float64 8B ...
    platform_type        <U5 20B ...
    time_coverage_end    <U20 80B ...
    time_coverage_start  <U20 80B ...
    volume_number        int64 8B ...
Attributes:
    Conventions:      None
    comment:          im/exported using xradar
    history:          None
    institution:      None
    instrument_name:  None
    references:       None
    source:           None
    title:            None
    version:          None

radar_parameters

<xarray.DatasetView> Size: 24B
Dimensions:    ()
Coordinates:
    altitude   float64 8B ...
    latitude   float64 8B ...
    longitude  float64 8B ...
Data variables:
    *empty*

<xarray.DatasetView> Size: 16MB Dimensions: (azimuth: 360, range: 654) Coordinates: altitude float64 8B ... * azimuth (azimuth) float32 1kB 0.03845 0.9558 ... 358.0 359.0 crs_wkt int64 8B ... elevation (azimuth) float32 1kB ... latitude float64 8B ... longitude float64 8B ... * range (range) float32 3kB 1e+03 1.15e+03 ... 9.88e+04 9.895e+04 time (azimuth) datetime64[ns] 3kB ... x (azimuth, range) float64 2MB ... y (azimuth, range) float64 2MB ... z (azimuth, range) float64 2MB ... Data variables: (12/17) DBTH (azimuth, range) float32 942kB ... DBZH (azimuth, range) float32 942kB ... DB_DBTE8 (azimuth, range) float32 942kB ... DB_DBZE8 (azimuth, range) float32 942kB ... DB_HCLASS (azimuth, range) int16 471kB ... KDP (azimuth, range) float32 942kB ... ... ... ZDR (azimuth, range) float32 942kB ... follow_mode <U7 28B ... prt_mode <U7 28B ... sweep_fixed_angle float64 8B ... sweep_mode <U20 80B ... sweep_number int64 8B ...sweep_0- azimuth: 360

- range: 654

- altitude()float64...

- long_name :

- altitude

- standard_name :

- altitude

- units :

- meters

[1 values with dtype=float64]

- azimuth(azimuth)float320.04395 1.046 2.052 ... 358.0 359.0

- axis :

- radial_azimuth_coordinate

- long_name :

- azimuth_angle_from_true_north

- standard_name :

- ray_azimuth_angle

- units :

- degrees

array([4.394531e-02, 1.046448e+00, 2.051697e+00, ..., 3.570227e+02, 3.580444e+02, 3.590469e+02], dtype=float32) - crs_wkt()int64...

- crs_wkt :

- PROJCRS["unknown",BASEGEOGCRS["unknown",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]],ID["EPSG",6326]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8901]]],CONVERSION["unknown",METHOD["Modified Azimuthal Equidistant",ID["EPSG",9832]],PARAMETER["Latitude of natural origin",6.93276012316346,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",-73.7625099532306,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["False easting",0,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

- false_easting :

- 0.0

- false_northing :

- 0.0

- geographic_crs_name :

- unknown

- grid_mapping_name :

- azimuthal_equidistant

- horizontal_datum_name :

- World Geodetic System 1984

- inverse_flattening :

- 298.257223563

- latitude_of_projection_origin :

- 6.932760123163462

- longitude_of_prime_meridian :

- 0.0

- longitude_of_projection_origin :

- -73.76250995323062

- prime_meridian_name :

- Greenwich

- projected_crs_name :

- unknown

- reference_ellipsoid_name :

- WGS 84

- semi_major_axis :

- 6378137.0

- semi_minor_axis :

- 6356752.314245179

[1 values with dtype=int64]

- elevation(azimuth)float32...

- axis :

- radial_elevation_coordinate

- long_name :

- elevation_angle_from_horizontal_plane

- standard_name :

- ray_elevation_angle

- units :

- degrees

[360 values with dtype=float32]

- latitude()float64...

- long_name :

- latitude

- positive :

- up

- standard_name :

- latitude

- units :

- degrees_north

[1 values with dtype=float64]

- longitude()float64...

- long_name :

- longitude

- standard_name :

- longitude

- units :

- degrees_east

[1 values with dtype=float64]

- range(range)float321e+03 1.15e+03 ... 9.895e+04

- axis :

- radial_range_coordinate

- long_name :

- range_to_measurement_volume

- meters_between_gates :

- 7500.0

- meters_to_center_of_first_gate :

- 100000

- spacing_is_constant :

- true

- standard_name :

- projection_range_coordinate

- units :

- meters

array([ 1000., 1150., 1300., ..., 98650., 98800., 98950.], dtype=float32)

- time(azimuth)datetime64[ns]...

- standard_name :

- time

[360 values with dtype=datetime64[ns]]

- x(azimuth, range)float64...

- standard_name :

- east_west_distance_from_radar

- units :

- meters

[235440 values with dtype=float64]

- y(azimuth, range)float64...

- standard_name :

- north_south_distance_from_radar

- units :

- meters

[235440 values with dtype=float64]

- z(azimuth, range)float64...

- standard_name :

- height_above_ground

- units :

- meters

[235440 values with dtype=float64]

- DBTH(azimuth, range)float32...

- long_name :

- Total power H (uncorrected reflectivity)

- standard_name :

- radar_equivalent_reflectivity_factor_h

- units :

- dBZ

[235440 values with dtype=float32]

- DBZH(azimuth, range)float32...

- long_name :

- Equivalent reflectivity factor H

- standard_name :

- radar_equivalent_reflectivity_factor_h

- units :

- dBZ

[235440 values with dtype=float32]

- DB_DBTE8 (azimuth, range) float32 ...

[235440 values with dtype=float32]

- DB_DBZE8 (azimuth, range) float32 ...

[235440 values with dtype=float32]

- DB_HCLASS (azimuth, range) int16 ...

[235440 values with dtype=int16]

- KDP (azimuth, range) float32 ...

- long_name :

- Specific differential phase HV

- standard_name :

- radar_specific_differential_phase_hv

- units :

- degrees per kilometer

[235440 values with dtype=float32]

- PHIDP (azimuth, range) float32 ...

- long_name :

- Differential phase HV

- standard_name :

- radar_differential_phase_hv

- units :

- degrees

[235440 values with dtype=float32]

- RHOHV (azimuth, range) float32 ...

- long_name :

- Correlation coefficient HV

- standard_name :

- radar_correlation_coefficient_hv

- units :

- unitless

[235440 values with dtype=float32]

- SQIH (azimuth, range) float32 ...

- long_name :

- Signal Quality H

- standard_name :

- signal_quality_index_h

- units :

- unitless

[235440 values with dtype=float32]

- VRADH (azimuth, range) float32 ...

- long_name :

- Radial velocity of scatterers away from instrument H

- standard_name :

- radial_velocity_of_scatterers_away_from_instrument_h

- units :

- meters per seconds

[235440 values with dtype=float32]

- WRADH (azimuth, range) float32 ...

- long_name :

- Doppler spectrum width H

- standard_name :

- radar_doppler_spectrum_width_h

- units :

- meters per seconds

[235440 values with dtype=float32]

- ZDR (azimuth, range) float32 ...

- long_name :

- Log differential reflectivity H/V

- standard_name :

- radar_differential_reflectivity_hv

- units :

- dB

[235440 values with dtype=float32]

- follow_mode () <U7 ...

[1 values with dtype=<U7]

- prt_mode () <U7 ...

[1 values with dtype=<U7]

- sweep_fixed_angle () float64 ...

[1 values with dtype=float64]

- sweep_mode () <U20 ...

[1 values with dtype=<U20]

- sweep_number () int64 ...

[1 values with dtype=int64]
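The sizes in this sweep's repr follow directly from the grid shape and the dtypes: each `(azimuth, range)` float32 field holds 360 × 654 = 235440 values × 4 bytes = 941760 bytes (shown as 942kB), and the int16 `DB_HCLASS` field takes half that. The sketch below adds up every coordinate and data variable listed for this sweep, assuming the `Size` header is simply the sum of all variables' bytes (as xarray's `nbytes` is). The helper `field_bytes` is hypothetical, introduced only for this illustration.

```python
import numpy as np

n_rays, n_gates = 360, 654
n_cells = n_rays * n_gates  # 235440, the "[235440 values ...]" count above


def field_bytes(dtype):
    """Bytes used by one (azimuth, range) field of the given dtype."""
    return n_cells * np.dtype(dtype).itemsize


# 11 float32 moments plus DB_HCLASS (int16), as listed under the data variables
moment_bytes = 11 * field_bytes(np.float32) + field_bytes(np.int16)

# x, y, z georeferenced coordinates are float64 on the same grid
xyz_bytes = 3 * field_bytes(np.float64)

# Scalar coordinates altitude, crs_wkt, latitude, longitude (8 B each);
# azimuth and elevation are float32 per ray, range is float32 per gate,
# time is datetime64[ns] per ray
small_coord_bytes = (
    4 * 8
    + 2 * n_rays * np.dtype(np.float32).itemsize
    + n_gates * np.dtype(np.float32).itemsize
    + n_rays * np.dtype("datetime64[ns]").itemsize
)

# Scalar metadata variables: follow_mode and prt_mode (<U7), sweep_mode (<U20),
# sweep_fixed_angle (float64) and sweep_number (int64)
scalar_bytes = 2 * np.dtype("<U7").itemsize + np.dtype("<U20").itemsize + 2 * 8

total = moment_bytes + xyz_bytes + small_coord_bytes + scalar_bytes
print(f"{total} bytes ~ {total / 1e6:.1f} MB")
```

This prints `16489360 bytes ~ 16.5 MB`, consistent with the `Size: 16MB` header; the three float64 `x`, `y`, `z` arrays alone account for about a third of it.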

<xarray.DatasetView> Size: 16MB
Dimensions:            (azimuth: 360, range: 654)
Coordinates:
    altitude           float64 8B ...
  * azimuth            (azimuth) float32 1kB 0.04395 1.046 2.052 ... 358.0 359.0
    crs_wkt            int64 8B ...
    elevation          (azimuth) float32 1kB ...
    latitude           float64 8B ...
    longitude          float64 8B ...
  * range              (range) float32 3kB 1e+03 1.15e+03 ... 9.88e+04 9.895e+04
    time               (azimuth) datetime64[ns] 3kB ...
    x                  (azimuth, range) float64 2MB ...
    y                  (azimuth, range) float64 2MB ...
    z                  (azimuth, range) float64 2MB ...
Data variables: (12/17)
    DBTH               (azimuth, range) float32 942kB ...
    DBZH               (azimuth, range) float32 942kB ...
    DB_DBTE8           (azimuth, range) float32 942kB ...
    DB_DBZE8           (azimuth, range) float32 942kB ...
    DB_HCLASS          (azimuth, range) int16 471kB ...
    KDP                (azimuth, range) float32 942kB ...
    ...                 ...
    ZDR                (azimuth, range) float32 942kB ...
    follow_mode        <U7 28B ...
    prt_mode           <U7 28B ...
    sweep_fixed_angle  float64 8B ...
    sweep_mode         <U20 80B ...
    sweep_number       int64 8B ...

sweep_1

- azimuth: 360

- range: 654

- altitude () float64 ...

- long_name :

- altitude

- standard_name :

- altitude

- units :

- meters

[1 values with dtype=float64]

- azimuth (azimuth) float32 0.04395 1.033 2.063 ... 358.1 359.1

- axis :

- radial_azimuth_coordinate

- long_name :

- azimuth_angle_from_true_north

- standard_name :

- ray_azimuth_angle

- units :

- degrees

array([4.394531e-02, 1.032715e+00, 2.062683e+00, ..., 3.570392e+02, 3.580554e+02, 3.590579e+02], dtype=float32)

- crs_wkt () int64 ...

- crs_wkt :

- PROJCRS["unknown",BASEGEOGCRS["unknown",DATUM["World Geodetic System 1984",ELLIPSOID["WGS 84",6378137,298.257223563,LENGTHUNIT["metre",1]],ID["EPSG",6326]],PRIMEM["Greenwich",0,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8901]]],CONVERSION["unknown",METHOD["Modified Azimuthal Equidistant",ID["EPSG",9832]],PARAMETER["Latitude of natural origin",6.93276012316346,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8801]],PARAMETER["Longitude of natural origin",-73.7625099532306,ANGLEUNIT["degree",0.0174532925199433],ID["EPSG",8802]],PARAMETER["False easting",0,LENGTHUNIT["metre",1],ID["EPSG",8806]],PARAMETER["False northing",0,LENGTHUNIT["metre",1],ID["EPSG",8807]]],CS[Cartesian,2],AXIS["(E)",east,ORDER[1],LENGTHUNIT["metre",1,ID["EPSG",9001]]],AXIS["(N)",north,ORDER[2],LENGTHUNIT["metre",1,ID["EPSG",9001]]]]

- false_easting :

- 0.0

- false_northing :

- 0.0

- geographic_crs_name :

- unknown

- grid_mapping_name :

- azimuthal_equidistant

- horizontal_datum_name :

- World Geodetic System 1984

- inverse_flattening :

- 298.257223563